Audialab has just released Humanize Alpha, a plugin employing the company’s AI-powered Deep Sampling technology to humanize drum samples. We’re taking a closer look at Humanize and giving away a FREE copy to one lucky BPB reader.

Audialab previously brought us Emergent Drums, a groundbreaking application that uses AI to generate infinite, royalty-free drum samples.

The Humanize plugin uses the same engine to do what its name suggests: humanizing drum samples.

Before moving on, though, let’s put humanization in context.

Our brains need to hear variation over time, even if it is barely perceptible, to stay interested in what we are listening to. A real drummer, for instance, never hits the snare exactly the same way twice.

Instead, there will be micro-variations in pitch, timing, dynamics, and timbre. And that’s why we feel real drums are more natural than beats made with electronic drum machines.
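To make the idea concrete, here is a minimal Python sketch that adds small random offsets to the timing and dynamics of a rigid drum pattern. It is a generic illustration, not how Humanize works internally, and the `Hit` class, the `humanize` function, and the jitter ranges are all hypothetical values chosen for the example.

```python
import random
from dataclasses import dataclass


@dataclass
class Hit:
    time_ms: float  # onset time in milliseconds
    velocity: int   # MIDI velocity, 1-127


def humanize(hits, timing_jitter_ms=8.0, velocity_jitter=10, seed=None):
    """Return a copy of `hits` with small random timing and velocity offsets.

    The default jitter amounts are assumptions for illustration only.
    """
    rng = random.Random(seed)
    result = []
    for hit in hits:
        # Nudge the onset slightly early or late, never before time zero.
        new_time = max(0.0, hit.time_ms + rng.uniform(-timing_jitter_ms, timing_jitter_ms))
        # Nudge the velocity and clamp it to the valid MIDI range.
        new_velocity = hit.velocity + rng.randint(-velocity_jitter, velocity_jitter)
        result.append(Hit(time_ms=new_time, velocity=min(127, max(1, new_velocity))))
    return result


# Four snare hits on a rigid 500 ms grid, all at the same velocity.
pattern = [Hit(time_ms=i * 500.0, velocity=100) for i in range(4)]
humanized = humanize(pattern, seed=1)
```

Pitch and timbre variations are harder to fake with simple random offsets, which is the gap a sample-generating tool aims to fill.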

When producing music, I usually automate parameters such as volume, pitch, and some effects to make sure my drum samples keep varying over time.

Alternatively, I create a drum rack (I’m an Ableton user) loaded with multiple variations of the same sample and use MIDI randomization to trigger them at random, creating a round-robin sample playback.

In either case, it’s honestly quite a tedious process, and that’s where a plugin like Humanize can help.
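The randomized round-robin idea described above can be sketched in a few lines of Python. This is a simplified illustration rather than what Ableton or Humanize does under the hood: the `RoundRobinPicker` class and the sample file names are hypothetical, and the only rule it enforces is that the same variation never plays twice in a row.

```python
import random


class RoundRobinPicker:
    """Pick a random sample variation, never repeating the previous one."""

    def __init__(self, samples, seed=None):
        if len(samples) < 2:
            raise ValueError("need at least two variations")
        self.samples = list(samples)
        self.rng = random.Random(seed)
        self.last = None

    def next(self):
        # Exclude the variation that just played, then choose among the rest.
        choices = [s for s in self.samples if s != self.last]
        self.last = self.rng.choice(choices)
        return self.last


# Hypothetical file names standing in for variations of one snare sample.
picker = RoundRobinPicker(["snare_a.wav", "snare_b.wav", "snare_c.wav"], seed=0)
sequence = [picker.next() for _ in range(16)]
```

A strict round-robin would instead cycle through the variations in a fixed order; the random version trades predictability for a less mechanical feel.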

I tried Audialab Humanize on a set of sampled drum machine sounds and was pleased by its simplicity and effectiveness.

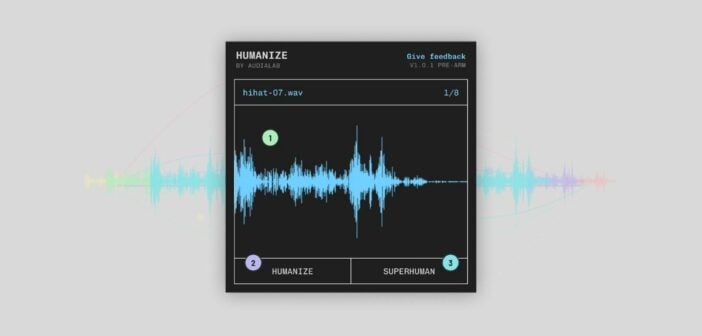

The workflow is straightforward. You just need to drag a drum sample into the plugin window to get started.

Then you have two options: Humanize & Superhuman.

The former creates realistic variations of the loaded sample, while the latter increases the variance for more unpredictability.

After clicking on one of the two options, you have to wait a bit (around 30 seconds) so that it can generate variations of the loaded sample (note that you need to have an internet connection for the plugin to work).

Once the process is completed, click on the sample display or trigger the device via MIDI, and you have a round-robin effect!

Audialab Humanize generated around 8-9 variations during my tests, enough to do the trick. It made subtle modifications to the initial sample’s timbre; typically, one or more variations had an added reverse noise tail at the end.

If you want to avoid the machine-gun effect of robotic drums that always sound the same, this is a plugin worth checking out. The software still has room to improve, but it is already pretty good!

Early adopters can access the private Discord server to get direct support from the developer team, which is great for exchanging feedback.

More info: Humanize Alpha

The Giveaway

Audialab is kindly offering one FREE copy of Humanize to one lucky BPB reader.

To enter the giveaway, please answer the following question in the comments section below: What would be an ideal AI-powered VST plugin?

We will randomly pick the winning comment on October 25, 2023.

Good luck, everyone, and thank you for reading BPB!

The winner is: frank reitz

Congratulations, Frank (we are reaching out via email)! :)

Everyone else, thank you for joining, and better luck next time!

239 Comments

Eli

I think the ideal AI-plug in would be something like chat-GPT where I could enter a prompt and it would return audio / midi… for example “give me a piano chord progression and topline in the style of Mac Miller’s 2009” or “give me a finger-picking guitar progression in the key of F#m”

MICHAEL CRAWFORD

An AI plugin that can analyze audio and create a matching rhythm and groove

CHRIS

An AI plugin that can easily analyse elements from a drum bus track and allows to add triggered layers with some generated (from scratch or from reference sample) variations with velocity matching.

Monochrome

The ideal AI-Powered plugin would be a self-tagging/organizing sample library

James Brohard

What would be an ideal AI-powered VST plugin?

An AI-powered VST plugin that could make an entire song.

It could either interview the user with questions designed to determine what kind of song, tempo, instruments, and other musical variables the user wants in the song, and then create it, or it could generate one based on the user’s choice in drop down menus and once all the drop-down menus have been answered, then create the song. Then after creating the song, it would allow the user to tweak it/fine tune it until the final song is exactly what the user wants.

PHoTRoN

You gonna pick a winner?